How do programming languages appear?

Language design should follow a methodology, just like software development does. We will learn how to use a meta-language based on namespaces (dictionaries) to design and prototype a programming language.

The processor of a von Neumann machine understands machine language, which can be typed on a keyboard as assembly language. The C language (like its ancestors, CPL, BCPL, and B) is assembly language "dressed up" with assignment equations, subroutines, and array and struct data structures. C is translated to machine language by a compiler. (See the next section.)

But there are other computing machines: there is an "expression machine", called a Lisp machine, that was proposed in the 1960s and built in the 1980s. There is a "stack machine", that was commanded by the language Algol60 and constructed by the Burroughs Company. There are other machines that were designed, some a century or more ago, but never built.

These days, software and language architects regularly design machines and their languages but emulate the machines in software --- the emulation is called a virtual machine. In its simplest form, a virtual machine is one or more data structures and operations that use the structures. The virtual-machine operations are the machine language.

How do we execute programs for a virtual machine? Do we write programs in machine language? Well, we can, but that is tedious and error prone. Instead we invent a "programmer's language" (actually, "programming language") whose constructions correspond to operations in the machine language. An interpreter is a controller program for the virtual machine: it reads a program written in the programming language and executes the corresponding machine-language operations. A good example is the JavaScript interpreter embedded within a web browser, which reads a JavaScript program and executes operations on the browser's own JavaScript virtual machine (which, despite the name, is unrelated to the Java virtual machine, "JVM"). Other examples are the implementations of Smalltalk, Scheme, Ruby, and Python.

Many languages, especially those with declarations and static data typing, use a compiler, which reads a program and generates a file of the machine-language operations that the program commands. That is, "An interpreter does the machine operations now; a compiler makes a file of the machine operations for doing later."

Machines, whether virtual or actual, are based on some concept. The von Neumann machine is based on the concept that data is saved in tiny bits, each bit saved in a storage cell, and computation is done by repeatedly examining and altering the data in the cells. The cells are arranged as a long, linear vector. Von Neumann machines are suited for linear algebra, a bit of physics, and some general math, which was fine in the 1940s when they were designed. But computing applies to many more areas these days. That's why the Lisp machine, stack machine, byte-code machine, and many other machines followed. These machines are emulated as virtual machines.

In the 1980s, software architects placed importance on components --- collections of named values. Components communicate with each other by calling each other's names. The component structures look nothing at all like von-Neumann-style storage. What is the virtual machine for an "object-oriented" machine?

In these notes we will learn a meta-language (machine language) that computes on components called namespaces, and we will write interpreters that use namespace meta-language for object-oriented programming languages like Smalltalk and Java.

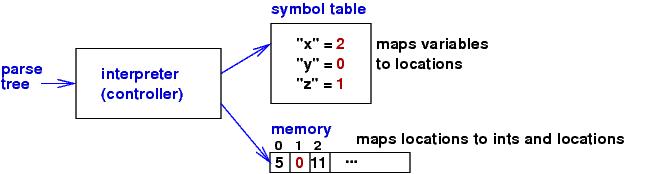

A true "C-virtual-machine" is a von Neumann machine augmented by a table, a symbol table, which holds the variable names in the C program and the storage locations named by the variable names:

The interpreter/controller reads a C program and does its actions on the data structures.

C's syntax expresses the domains and operations of the machine; here is a minimal, "core" version:

===================================================

P : Program

CL : CommandList C : Command

DL : DeclarationList D : Declaration

E : Expression L : LefthandSide

I : Identifier N : Numeral

P ::= DL ; CL

DL ::= D | D ; DL

D ::= declare I

CL ::= C | C ; CL

C ::= L = E | while ( E ) { CL } | if ( E ) { CL1 } else { CL2 }

E ::= N | ( E1 + E2 ) | L | & L

L ::= I | * L

N ::= string of digits

I ::= strings of alphanumerics

===================================================

Assignment, =, manipulates memory, and operators, * and &, compute on locations. Symbol-table use happens within declaration and use of identifiers, I. The syntax defines C's expressible values to be ints and locations.

The program that created the above storage configuration was this one:

declare y; declare z; declare x;

y = 5 ;

z = &y ; # store in z's cell the location named by y

x = (6 + *z) # add 6 to the int stored where z points and assign
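The three-line program can be traced on a toy model of the machine. The following is only a sketch in Python 3, under assumptions that are not in the notes: `memory` is a Python list standing in for the linear store, `symtab` is a dictionary standing in for the symbol table, cells start at 0, and the helper names `declare`, `lvalue`, and `deref` are invented for the illustration.

```python
# A toy "C virtual machine": a linear memory plus a symbol table.
memory = []   # the store: cell i holds an int (or a location, which is also an int)
symtab = {}   # symbol table: variable name -> location (an index into memory)

def declare(name):
    """Reserve the next free cell for name and record it in the symbol table."""
    symtab[name] = len(memory)
    memory.append(0)          # assumption: new cells are initialized to 0

def lvalue(name):
    """The location named by an identifier (what & computes)."""
    return symtab[name]

def deref(loc):
    """The contents of the cell at location loc (what * computes)."""
    return memory[loc]

# declare y; declare z; declare x;
declare('y')
declare('z')
declare('x')

memory[lvalue('y')] = 5                               # y = 5
memory[lvalue('z')] = lvalue('y')                     # z = &y
memory[lvalue('x')] = 6 + deref(deref(lvalue('z')))   # x = (6 + *z)

print(symtab)   # {'y': 0, 'z': 1, 'x': 2}
print(memory)   # [5, 0, 11]
```

Reading z as an expression fetches the contents of z's cell (the location of y), and the extra `deref` performs the `*`, so x's cell receives 6 + 5.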

We might express in the syntax the symbol table and memory themselves. Indeed, C lets you express approximations of both --- a struct is a C-coded "baby symbol table", and an array is a C-coded "baby memory". (We won't develop them here....)

Now, we can enrich the syntax with naming devices --- data types, procedures, functions (or if you are Bjarne Stroustrup, lots more!).

How do we state precisely what the syntax constructions tell the machine to do? There must be some sort of official description! The usual answer is: you write an interpreter, a definitional interpreter, that states the actions taken by each construction. The definitional interpreter itself must be coded in something --- machine language, maybe? Think about how you might write a definitional interpreter for C. How would this differ from a C compiler?

Programming-language history is full of such meta-languages: VDL (for tree machines), lambda-calculus (for rewriting machines), binary-machine code (for von Neumann/Turing machines) are three famous ones. More recently, there are also the object calculus, CCS, CSP, and various logical frameworks (Isabelle/HOL, Coq, LF).

The meta-language we will use in these notes is "namespace algebra". It is a notation for using namespaces, just like ordinary algebra is a notation for using numbers.

A binding is an association between a name and a meaning. The name can be referenced later when the meaning is required.

Here is an example, where there are two bindings that help compute a meaning:

let x = 2 + 1

let y = x + x

x * y

We use the laws of algebra to compute the meaning of x * y:

===================================================

let x = 2 + 1

let y = x + x

x * y

==> let x = 3 because 2 + 1 = 3

let y = x + x

x * y

==> let x = 3 because x + x = 3 + 3 = 6

let y = 6

x * y

==> 18 because x * y = 3 * 6 = 18

===================================================

x binds to the meaning, 3, and y binds to the meaning, 6, so that x * y evaluates to the meaning, 18.

Here is a "script" of bindings and expressions to solve:

===================================================

let x = 2 + 1

let y = x + x

x * y

((x + y) * (x * y)) + (2 * (x + y)) + 1

y - 1

===================================================

The script generates two bindings and three integers.
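As a quick check of the script, here is a small Python 3 sketch that keeps the bindings in a dictionary and collects the meanings of the three expressions. The names `bindings` and `meanings` are made up for this illustration.

```python
bindings = {}                                   # the names bound so far
bindings['x'] = 2 + 1                           # let x = 2 + 1
bindings['y'] = bindings['x'] + bindings['x']   # let y = x + x

x, y = bindings['x'], bindings['y']
meanings = [
    x * y,                                      # x * y
    ((x + y) * (x * y)) + (2 * (x + y)) + 1,    # the second expression
    y - 1,                                      # y - 1
]

print(bindings)   # {'x': 3, 'y': 6}
print(meanings)   # [18, 181, 5]
```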

Algebra's syntax and its laws define a meta-language for computing numbers. If we wanted, we could build a machine that understands algebra and does the computation.

As before, a binding, n=v, associates name n with meaning, v. Names are atoms ("strings"), and meanings are primitives: either integers (0, 1, 2, ...) or labels called handles (in the examples, we use Greek letters, α, β, ...). A namespace is a collection of bindings labelled by a handle, e.g. α:{'x'=3, 'y'=β} is a namespace of bindings for 'x' and 'y', labelled by handle α.

We want an algebra for computing on namespaces. Here are the operations of "namespace algebra":

As in ordinary algebra, we write a sequence of bindings and expressions in namespace algebra. We call the sequence a script. Unlike ordinary algebra, namespace algebra generates meanings and namespaces. Here is an example script and the computation to its meaning:

    let d = bind(
              bind(alloc{}, 'x', 1),
              'y', 2)
    let e = alloc{}
    bind(d, 'x', e)
    bind(e, 'z', find(d, 'x'))

    heap: (no namespaces yet)

==> let d = α
    let e = alloc{}
    bind(d, 'x', e)
    bind(e, 'z', find(d, 'x'))

    heap: α:{'x'=1, 'y'=2}
    because alloc{} ==> α and the two bind operations add 'x' and 'y' to α

==> let d = α
    let e = β
    bind(d, 'x', e)
    bind(e, 'z', find(d, 'x'))

    heap: α:{'x'=1, 'y'=2}, β:{}
    because alloc{} ==> β

==> let d = α
    let e = β
    α
    bind(e, 'z', find(d, 'x'))

    heap: α:{'x'=β, 'y'=2}, β:{}
    because bind(d, 'x', e) = bind(α, 'x', β) ==> α

==> let d = α
    let e = β
    α
    β

    heap: α:{'x'=β, 'y'=2}, β:{'z'=β}
    because find(d, 'x') = find(α, 'x') ==> β and bind(β, 'z', β) ==> β

Occasionally, we will abbreviate multiple bindings on a new namespace,

bind( ...

bind(

bind(alloc{}, n0, v0),

n1, v1),

...,

nm, vm)

by

alloc{n0= v0, ..., nm-1= vm-1, nm= vm}

Finally, it will be useful to have a "choice operation", which we write as if B : S1 else S2, that is, expression S1 or S2 is chosen to evaluate based on the boolean value of B.

(Comment: in ordinary algebra on numbers, we let 0 stand for False and 1 stand for True, so if B: S1 else S2 is really just this arithmetic expression: (B * S1) + ((1 - B) * S2), where 1 - B is the negation of B. The choice operation is coded with gates in circuit theory the same way, where * is an and-gate, + is an or-gate, and the negation is a not-gate.)
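The arithmetic coding of choice can be tried directly. This is a minimal Python 3 sketch, assuming B is the int 0 or 1 and writing the negation of B as 1 - B; the function name `choose` is invented here.

```python
def choose(b, s1, s2):
    """Arithmetic coding of `if B : S1 else S2`, where b is 0 (False) or 1 (True)."""
    return (b * s1) + ((1 - b) * s2)

print(choose(1, 10, 20))   # 10
print(choose(0, 10, 20))   # 20
```

Note one difference from a real choice operation: here both s1 and s2 are computed before the arithmetic selects one of them, whereas the choice operation evaluates only the chosen expression.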

Namespaces appear in semantic models as

We don't want to build the "heap machine" out of gates and wires; we want to emulate it in software. There are two ways to do this: "top down" and "bottom up". We'll develop these approaches when we study Domain-Specific Programming Languages, and for now, we employ the bottom-up approach, which is particularly easy for the small namespace algebra:

For Step 1, we use Python as the host language, because it has dictionaries as a built-in data structure. Python lets us define functions, and a Python program is a script just like a namespace-algebra script.

To get started, we make an important observation: a heap is itself a form of namespace that binds handles to namespaces. Our virtual machine will maintain a variable, heap, whose value is a dictionary:

===================================================

heap : { Handle : Namespace }

where

Namespace = { Identifier : Meaning }

Meaning = Handle | Int

===================================================

Handles will be modelled as Python strings.

For Step 2, we write functions to emulate the namespace-algebra operations. Here are sample Python codings for the heap and the operations alloc and bind:

===================================================

heap = {}        # holds labelled namespaces
heapCount = 0    # how many namespaces stored in the heap

def alloc(d):
    """allocates a new object in the heap.
       param d - a dictionary holding bindings to be copied
                 into the new object
       returns - the handle to the new object
    """
    newhandle = genHandle()    # see just below
    heap[newhandle] = {}
    for name in d:
        heap[newhandle][name] = d[name]
    return newhandle

def genHandle():
    """returns a new handle, a string, hi, where i is an int"""
    global heapCount
    han = 'h' + str(heapCount)
    heapCount = heapCount + 1
    return han

def bind(handle, fieldname, meaning):
    """binds fieldname to meaning in the namespace labelled by handle"""
    if handle in heap:
        heap[handle][fieldname] = meaning
    else:
        # str() lets the message print even when handle is not a string
        raise Exception("bind error: " + str(handle) + " does not exist in the heap")
    return handle

def printHeap():
    """prints the contents of the heap, one namespace per line, sorted by handle"""
    print("heap = {")
    for h in sorted(heap):
        print("  " + h + " : " + str(heap[h]))
    print("}")

===================================================

You should write the functions that implement member and find. Function printHeap is called to display the contents of the emulated heap storage.

Say that all these functions are saved in a file, NamespaceVM.py.
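One possible coding of the two remaining operations is sketched below in Python 3. It is not the notes' official answer: the argument order, find(handle, name) and member(name, handle), is taken from how the operations are used elsewhere in these notes, the choice of KeyError for a failed lookup is an assumption, and the small hand-built `heap` exists only to make the sketch runnable on its own.

```python
# A small hand-built heap, for illustration only.
heap = {'h0': {'x': 1, 'y': 'h1'}, 'h1': {}}

def find(handle, fieldname):
    """returns the meaning bound to fieldname in the namespace labelled by handle"""
    if handle in heap and fieldname in heap[handle]:
        return heap[handle][fieldname]
    raise KeyError("find error: " + str(fieldname) + " is not bound in " + str(handle))

def member(fieldname, handle):
    """returns True exactly when fieldname is bound in the namespace labelled by handle"""
    return handle in heap and fieldname in heap[handle]

print(find('h0', 'y'))     # h1
print(member('x', 'h0'))   # True
print(member('z', 'h1'))   # False
```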

For Step 3, we write a Python script that holds the algebra expression to compute:

===================================================

from NamespaceVM import * # link to the emulator

# the namespace-algebra script follows:

d = bind(bind(alloc({}), 'x', 1), 'y', 2)

e = alloc({})

bind(d, 'x', e)

bind(e, 'z', find(d, 'x'))

# display the final configuration:

printHeap()

===================================================

This will print:

heap = {
  h0 : {'x': 'h1', 'y': 2}
  h1 : {'z': 'h1'}
}

showing that two namespaces were created in the heap, labelled by handles h0 and h1. (The order in which the bindings inside a namespace are printed depends on the Python version.)

The heap machine for namespace algebra is an excellent starting point: we will define a "programmer's language" for the heap machine's "machine language." The former will be what we call an object-oriented language.

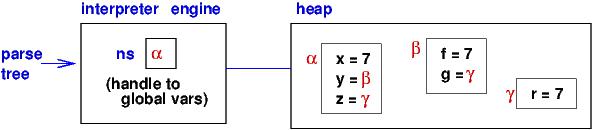

The interpreter for the object-oriented language will look like this:

The input to the engine is an object-program converted into parse-tree format. As the engine traverses the tree and reads its contents, it performs namespace algebra, which constructs namespaces in the heap.

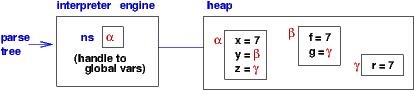

The engine has a register that holds a distinguished handle, named ns, which is the handle to the "active namespace" whose bindings are visible to the command currently being executed. In Java/C#, you would say that the "active namespace" is "this object" or just "this".

Here is the object language whose programs are input to the interpreter:

===================================================

C : Command L : LefthandSide

E : Expression I : Variable

F : FieldName N : Numeral

C ::= L = E | if E : C1 else C2 | while E : C | C1 ; C2

E ::= N | ( E1 + E2 ) | L | new { F }

F ::= I | I , F

L ::= I | L . I | this

N ::= string of digits

I ::= strings of letters, not including keywords

===================================================

Here is a sample object program that plausibly generates the earlier picture. (We still have to work out the details!)

x = 7;

y = new {f, g};

y.f = x;

y.g = new {r};

z = y.g;

z.r = y.f;

The syntax for new namespaces (new{I1,I2,...}) is inadequate --- if we construct a new object, we want to initialize its fields. Here is a simple but perhaps surprising way to do just that:

E ::= . . . | new { C }

The (compound) command C computes bindings that fill a newly allocated namespace --- C is "constructor code." (In Java/C#, C would be the code inside the "constructor method".)

Here is an example where there is constructor code inside a new object:

x = 7;

y = new { f = x;

g = new {r = x}; // (*)

};

z = y.g; // assigns the handle, y.g, to z

z.r = y.f + y.g.r + x // assigns 21 to y.g.r's cell

The second command assigns to variable y the handle of a new namespace and fills the namespace with bindings for f and g (where g is assigned a handle to another new namespace, which holds a binding for r). How does the machine make these bindings?

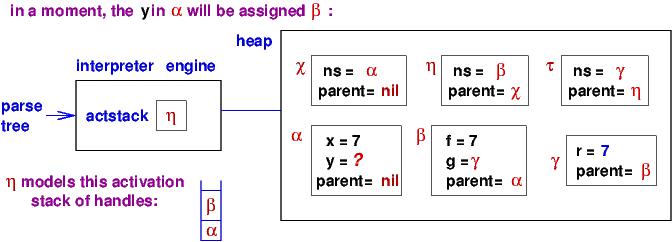

When the program starts, the ns register in the interpreter points to the namespace where global variables, x, y, etc., will be saved. But when y = new {...} is encountered, the machine allocates a new namespace and resets the value of ns to the handle for the new namespace. So, variable f will be stored in the new namespace (and not the namespace where global variables live). And when g = new{...} is encountered, the ns register is reset again, to the handle of the new(est) namespace. At that point in the program, there are three namespaces that are active.

To remember the various values of ns, the machine maintains a stack of the namespaces' handles. The handle at the top of the stack refers to the local namespace. The stack is itself implemented with namespaces --- this is how it's done in Smalltalk, Javascript, Ruby, etc.!

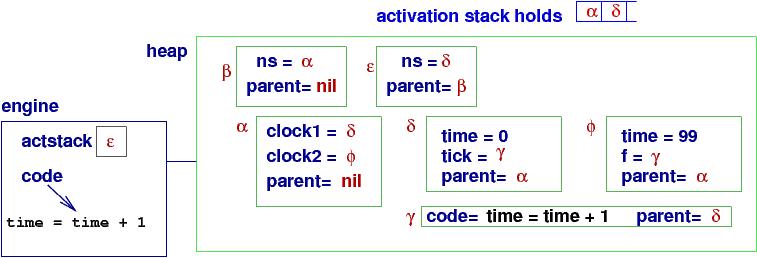

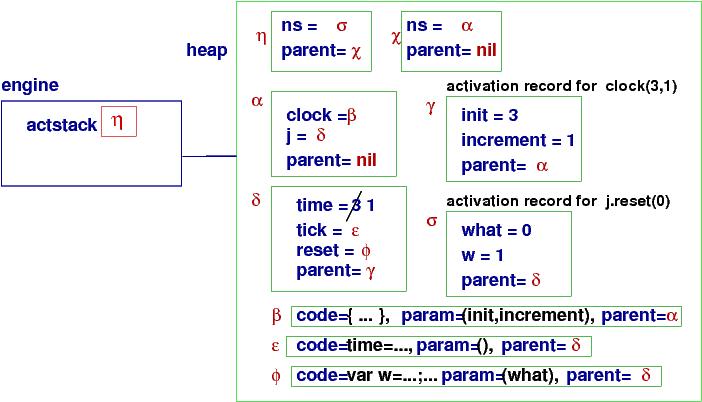

Here is a snapshot of the execution at point (*), just before the namespace labelled by β is finished and β is assigned to y:

There is a stack (activation stack) of the active namespaces. In the diagram, the stack is a linked list whose cells possess fields ns and parent. The topmost cell in the activation stack holds the handle of the local namespace that is used for variable lookups. The parent fields chain together the linked list. Register actstack in the engine points to the top of the stack --- to the cell that holds the active value of ns. Once we have multiple active namespaces, we have multiple occurrences of ns!

In addition, parent links are added to all namespaces to indicate where each namespace's nonlocal variables are found. For example, object β's parent is object α (because it is nested within α's namespace). This is how the global x can be found so that the assignments f = x and then r = x can be evaluated.

The semantics of new{C} goes like this:

x = 7;

y = new { f = x;

g = new {r = x};

};

z = y.g;

z.r = y.f + y.g.r + x

There are two forms of variable lookup:

x = 7;

y = new { f = super.x;

g = new {r = super.super.x};

};

z = y.g;

z.r = y.f + y.g.r + this.x

super.x references the x bound in the namespace that is one level more global than the local one. this.x references the x bound in the local namespace.

You should think about how to implement this and super with the ns and parent links in the heap machine. (Later, this and super take on a slightly different meaning.)

Java, C#, and C use outside-in lookup, and they limit inside-out lookup to just "local and one-level up". Python has both inside-out and outside-in lookup.

The example object language we will develop in these notes contains both inside-out and outside-in lookup.

Consider this example:

x = 7;

y = new {z = x; x = 0};

x = x + y.z

Is variable z stored in y's namespace or in the global namespace? Does x = 0 reset global x or create a second x in y's namespace?

We must remove this confusion. There are several ways to do so, but the simplest is to add a new keyword, var, which we use to create a new binding in the local namespace. We rewrite the example like this:

var x = 7;

var y = new {var z = x; x = 0};

x = x + y.z

Now it is clear that variables x and y are bound in the "global" namespace, variable z is bound in y's namespace, and "global" x is updated to 0.

var I = E is a declaration of a new binding, and L = E is an assignment to an existing binding.

===================================================

P : Program

C : Command E : Expression

T : Template L : Lefthandside

P ::= T

C ::= var I = E | L = E | C1 ; C2 | while E : C end

E ::= N | ( E1 + E2 ) | L | new T

T ::= { C }

L ::= I | L . I | this

===================================================

We write a semantics that translates each syntax construction into a script of namespace algebra. The script explains exactly what each construction means.

There are two notions to be worked out before we study the semantics.

What if L is an array expression? The assignment, A[I] = E computes A to the base address of the array, and I computes to an offset or index within the array. The pair, (base, offset), are then computed to a storage cell location where the meaning of E is deposited.

If L is a struct expression, then S.I = E computes S to a base address and I computes to an offset; the pair, (base, offset), are computed to a location where the meaning of E is deposited.

Data-structure computation uses a base+offset pair to state the coordinates where an update should be made. The pair is called an L-value ("left-hand-side value").

Arrays and structs are data structures, and so are namespaces. In the object language, the semantics of assignment, L = E, uses L-values as follows:

var x = 7;

var y = new { var f = x };

x = y.f;

An inside-out lookup for x searches the static chain for the namespace labelled h that holds x's binding. The L-value is (h, 'x').

An outside-in lookup of y.f computes the handle, g, named by y so that the L-value is (g, 'f').

The L-values are used to do find and bind operations.

We model the activation stack by a linked list of namespaces, like we saw in the diagrams. This requires a register (variable) in the interpreter that remembers the top of the stack:

===================================================

actstack : Handle # remembers the handle to the top cell of the activation stack; that cell's 'ns' field holds the handle of the currently active namespace

===================================================

We define the usual operations on a linked-list stack whose cells have the format, {'ns'= Handle, 'parent'= Handle}. We define the functions with namespace-algebra notation.

===================================================

### make the namespace labelled h the active namespace:

let push(h) == let newtop = alloc{'ns'= h, 'parent'= actstack}

let actstack = newtop

### forget the active namespace:

let pop() == let actstack = find(actstack, 'parent')

### retrieve the handle of the active namespace:

let top() == if actstack == nil : nil

else : find(actstack, 'ns')

===================================================

Here is a namespace-algebra script that uses the functions to generate the updates demanded by the program, var x = 7; var y = new {var f = 8}; y.f = 99. Starting with an empty stack, it allocates a namespace to hold global variables, then declares x in it, and constructs a second namespace whose handle is y's value so that y is declared too:

let actstack = nil

push(alloc{}) # namespace for global vars

bind(top(), 'x', 7) # declare var x = 7 in global ns

let newhandle = alloc{} # allocate namespace for y

push(newhandle) # make the new namespace the active one

bind(top(), 'f', 8) # var f = 8 in new namespace

pop() # now that the new object is initialized, it is no longer active

bind(top(), 'y', newhandle) # var y = ... in global ns

bind(find(top(), 'y'), 'f', 99) # y.f = 99

The script shows a pattern of pushing and popping handles so that declarations use the top handle of the activation stack. The pattern is formalized in the semantics definition of the source programming language.
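The stack operations and the script can be emulated in Python 3 to watch the push/pop pattern at work. This is a sketch under assumptions, not the notes' own code: `None` stands in for nil, and `alloc`, `bind`, and `find` are compact stand-ins for the emulator functions, defined here only so that the block runs on its own.

```python
heap = {}         # Handle -> Namespace
actstack = None   # handle of the top stack cell; None plays the role of nil
count = 0         # used to generate fresh handles h0, h1, ...

def alloc(d):
    """Allocate a namespace holding a copy of d's bindings; return its handle."""
    global count
    handle = 'h' + str(count)
    count += 1
    heap[handle] = dict(d)
    return handle

def bind(handle, name, meaning):
    heap[handle][name] = meaning
    return handle

def find(handle, name):
    return heap[handle][name]

def push(h):
    """Make the namespace labelled h the active namespace."""
    global actstack
    actstack = alloc({'ns': h, 'parent': actstack})

def pop():
    """Forget the active namespace."""
    global actstack
    actstack = find(actstack, 'parent')

def top():
    """Retrieve the handle of the active namespace (or nil for an empty stack)."""
    return None if actstack is None else find(actstack, 'ns')

# var x = 7; var y = new {var f = 8}; y.f = 99
push(alloc({}))                    # namespace for global vars
bind(top(), 'x', 7)                # var x = 7 in global ns
newhandle = alloc({})              # allocate namespace for y
push(newhandle)                    # make the new namespace the active one
bind(top(), 'f', 8)                # var f = 8 in new namespace
pop()                              # the new object is initialized
bind(top(), 'y', newhandle)        # var y = ... in global ns
bind(find(top(), 'y'), 'f', 99)    # y.f = 99

for h in sorted(heap):
    print(h, ':', heap[h])
```

In this run, h0 is the global namespace, h2 is y's object, and h1 and h3 are the stack cells; after the final pop, actstack is back at h1.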

let f(x) == E

... f(E') ...  ==>  ... f(m') ...      where m' is the meaning of E'

               ==>  ... [m'/x]E ...    where [m'/x]E is E with all occurrences of x replaced by m'

That is, first compute the meaning of the call's argument and then replace the call by the function's body, where the argument's meaning replaces the parameter variable.

Example:

===================================================

    let actstack = nil
    push(alloc{})

FIRST, COMPUTE THE ARGUMENT'S MEANING:

==> let actstack = nil                                        and  h0:{}
    push(h0)

SECOND, REPLACE THE CALL BY THE FUNCTION'S BODY:

==> let actstack = nil
    let newtop = alloc{'ns'= h0, 'parent'= actstack}
    let actstack = newtop

NOW, COMPUTE THE MEANING OF THE BODY:

 =  let actstack = nil
    let newtop = bind(bind(alloc{}, 'ns', h0), 'parent', actstack)
    let actstack = newtop

 =  let actstack = nil
    let newtop = bind(bind(alloc{}, 'ns', h0), 'parent', nil)
    let actstack = newtop

==> let actstack = nil                                        and  h0:{}
    let newtop = bind(bind(h1, 'ns', h0), 'parent', nil)           h1:{}
    let actstack = newtop

==> let actstack = nil                                        and  h0:{}
    let newtop = bind(h1, 'parent', nil)                           h1:{'ns'= h0}
    let actstack = newtop

==> let actstack = nil                                        and  h0:{}
    let newtop = h1                                                h1:{'ns'= h0, 'parent'= nil}
    let actstack = newtop

==> let actstack = nil                                        and  h0:{}
    let newtop = h1                                                h1:{'ns'= h0, 'parent'= nil}
    let actstack = h1

===================================================

At this point, there are two namespaces and actstack means h1.

Finally, here are the semantics equations. They build namespaces in the heap and maintain the register, actstack, which points to the top of the linked-list activation stack:

===================================================

Virtual-machine data structures:

    heap : { Handle : Namespace }
        where Namespace = { Identifier : Denotable }
              Denotable = Handle | Integer
    LValue = Handle * Identifier
    actstack : Handle

Program[[.]] updates actstack, heap:

    [[ T ]] == let actstack = nil
               Template[[ T ]]

Command[[.]] updates actstack, heap:

    [[ var I = E ]] == bind(top(), I, Expression[[ E ]])

    [[ L = E ]] == bind(Lefthandside[[ L ]], Expression[[ E ]])

    [[ while E : C end ]] == if Expression[[ E ]] != 0 :
                                 Command[[ C ]]
                                 Command[[ while E : C end ]]
                             else : (skip)

    [[ C1 ; C2 ]] == Command[[ C1 ]]
                     Command[[ C2 ]]

Expression[[.]] updates actstack, heap and returns Denotable:

    [[ N ]] == int(N)

    [[ E1 + E2 ]] == Expression[[ E1 ]] + Expression[[ E2 ]]

    [[ L ]] == find Lefthandside[[ L ]]

    [[ new T ]] == Template[[ T ]]

Template[[.]] updates actstack, heap and returns Handle:

    [[ { C } ]] == let newhandle = alloc{'parent' = top()}
                   push(newhandle)
                   Command[[ C ]]
                   pop()
                   newhandle

Lefthandside[[.]] returns LValue:

    [[ I ]] == searchStatic(top(), I)
        where searchStatic(thishandle, I) ==
                  if thishandle != nil :
                      if member(I, thishandle) :
                          (thishandle, I)
                      else : searchStatic(find(thishandle, 'parent'), I)
                  else : raise Exception

    [[ L . I ]] == let han = find Lefthandside[[ L ]]
                   if member(I, han) :
                       (han, I)
                   else : raise Exception

    [[ this ]] == (actstack, 'ns')

===================================================

In the equation Command[[ L = E ]] == bind(Lefthandside[[ L ]], Expression[[ E ]]), the phrase Lefthandside[[L]] computes to an L-value --- a pair, (handle, id) --- and Expression[[E]] computes to an expressible value, m, which is an int or a handle. For simplicity, the phrase bind((handle, id), m) is treated the same as bind(handle, id, m).
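To see the equations operate, here is a rough Python 3 prototype that follows them clause by clause. It is a sketch under several assumptions that the notes do not fix: parse trees are nested tuples with invented tags ('template', 'var', 'assign', 'while', 'seq', 'num', 'add', 'deref', 'new', 'id', 'dot', 'this'), `None` stands for nil, and the compact `alloc`, `bind`, `find`, and `member` stand in for the emulator's functions.

```python
heap, actstack, count = {}, None, 0          # None plays the role of nil

def alloc(d):
    global count
    handle = 'h' + str(count)
    count += 1
    heap[handle] = dict(d)
    return handle

def bind(h, name, m):
    heap[h][name] = m
    return h

def find(h, name):
    return heap[h][name]

def member(name, h):
    return h in heap and name in heap[h]

def push(h):
    global actstack
    actstack = alloc({'ns': h, 'parent': actstack})

def pop():
    global actstack
    actstack = find(actstack, 'parent')

def top():
    return None if actstack is None else find(actstack, 'ns')

def program(t):                              # Program[[ T ]]
    global actstack
    actstack = None
    return template(t)

def template(t):                             # Template[[ { C } ]], t == ('template', C)
    newhandle = alloc({'parent': top()})
    push(newhandle)
    command(t[1])
    pop()
    return newhandle

def command(c):                              # Command[[ . ]]
    tag = c[0]
    if tag == 'var':                         # ('var', I, E)
        bind(top(), c[1], expression(c[2]))
    elif tag == 'assign':                    # ('assign', L, E)
        handle, name = lefthandside(c[1])
        bind(handle, name, expression(c[2]))
    elif tag == 'while':                     # ('while', E, C)
        while expression(c[1]) != 0:
            command(c[2])
    elif tag == 'seq':                       # ('seq', C1, C2)
        command(c[1])
        command(c[2])
    else:
        raise ValueError("unknown command: " + str(tag))

def expression(e):                           # Expression[[ . ]]
    tag = e[0]
    if tag == 'num':                         # ('num', '7')
        return int(e[1])
    if tag == 'add':                         # ('add', E1, E2)
        return expression(e[1]) + expression(e[2])
    if tag == 'deref':                       # ('deref', L): find Lefthandside[[ L ]]
        handle, name = lefthandside(e[1])
        return find(handle, name)
    if tag == 'new':                         # ('new', T)
        return template(e[1])
    raise ValueError("unknown expression: " + str(tag))

def search_static(thishandle, name):         # inside-out lookup along the parent links
    if thishandle is None:
        raise NameError(name + " is not declared")
    if member(name, thishandle):
        return (thishandle, name)
    return search_static(find(thishandle, 'parent'), name)

def lefthandside(l):                         # Lefthandside[[ . ]] returns an L-value
    tag = l[0]
    if tag == 'id':                          # ('id', I)
        return search_static(top(), l[1])
    if tag == 'dot':                         # ('dot', L, I)
        handle, name = lefthandside(l[1])
        han = find(handle, name)
        if member(l[2], han):
            return (han, l[2])
        raise NameError(l[2] + " is not a field of the object")
    if tag == 'this':                        # ('this',)
        return (actstack, 'ns')
    raise ValueError("unknown lefthandside: " + str(tag))

# { var x = 7; var y = new {var f = x} }
tree = ('template',
        ('seq', ('var', 'x', ('num', '7')),
                ('var', 'y', ('new', ('template',
                                      ('var', 'f', ('deref', ('id', 'x'))))))))
result = program(tree)
for h in sorted(heap):
    print(h, ':', heap[h])
```

The run allocates four namespaces: h0 (the global namespace, which ends up holding x and y), h2 (y's object, holding f), and the two stack cells h1 and h3.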

var ob = new {var f = 1};

var save = this;

this = ob;

f = 2;

this = save;

We can disallow such activity by restricting the syntax of Lefthandsides:

L ::= I | this . I | L . I

The semantics shows the precise actions a program makes on a heap. Here is a completely worked example, where the program, {var x = 7; var y = new {var f = x}}, is expanded into its script of namespace-algebra performed on the heap machine. The expanded program appears on the left and the values of the heap and stack pointer appear on the right:

===================================================

ABBREVIATIONS:

T0 == { var x = 7; var y = new {var f = x} }

C0 == var x = 7

C1 == var y = new T1

T1 == {var f = x}

SCRIPT: DATA STRUCTURES:

--------------------- ---------------------

Program[[ T0 ]]

heap = {}

== let actstack = nil

Template[[ T0 ]]

heap == {}

actstack == nil

== Template[[ T0 ]]

== let newhandle = alloc{'parent'= top()}

push(newhandle)

Command[[ C0; C1 ]]

pop()

newhandle

heap == { h0 = {'parent'=nil} }

actstack == nil

== let newhandle = h0

push(h0)

Command[[ C0; C1 ]]

pop()

h0

heap == { h0 = {'parent'=nil},

h1 = {'ns'=h0, 'parent'=nil} }

actstack == h1

== Command[[ C0; C1 ]]

pop()

h0

== Command[[ var x = 7 ]]

Command[[ C1 ]]

pop()

h0

== bind(top(), 'x', Expression[[ 7 ]])

Command[[ C1 ]]; pop(); h0

== bind(h0, 'x', 7)

Command[[ C1 ]]; pop(); h0

heap == { h0 = {'x'=7, 'parent'=nil},

h1 = {'ns'=h0, 'parent'=nil} }

actstack == h1

== Command[[ var y = new T1 ]]

pop(); h0

== bind(top(), 'y', Expression[[ new T1 ]])

pop(); h0

== bind(h0, 'y', Template[[ {var f = x} ]])

pop(); h0

== bind(h0, 'y', (let newhan = alloc{'parent' = top()}

push(newhan)

Command[[ var f = x ]]

pop()

newhan))

pop(); h0

heap == { h0 = {'x'=7, 'parent'=nil},

h1 = {'ns'=h0, 'parent'=nil},

h2 = {'parent'=h0},

h3 = {'ns'=h2, 'parent'=h1}

}

actstack == h3

== bind(h0, 'y', (Command[[ var f = x ]]; pop(); h2))

pop(); h0

== bind(h0, 'y', (bind(h2, 'f', Expression[[ x ]]); pop(); h2))

pop(); h0

== bind(h0, 'y', (bind(h2, 'f', find Lefthandside[[ x ]]);

pop(); h2))

pop(); h0

== bind(h0, 'y', (bind(h2, 'f', find(h0,'x')); pop(); h2))

pop(); h0

== bind(h0, 'y', (bind(h2, 'f', 7); pop(); h2))

pop(); h0

heap == { h0 = {'x'=7, 'parent'=nil},

h1 = {'ns'=h0, 'parent'=nil},

h2 = {'f'=7, 'parent'=h0},

h3 = {'ns'=h2, 'parent'=h1}

}

actstack == h3

== bind(h0, 'y', (h2; pop(); h2))

pop(); h0

heap == { h0 = {'x'=7, 'parent'=nil},

h1 = {'ns'=h0, 'parent'=nil},

h2 = {'f'=7, 'parent'=h0},

h3 = {'ns'=h2, 'parent'=h1}

}

actstack == h1

== bind(h0, 'y', h2 )

pop(); h0

heap == { h0 = {'y'=h2, 'x'=7, 'parent'=nil},

h1 = {'ns'=h0, 'parent'=nil},

h2 = {'f'=7, 'parent'=h0},

h3 = {'ns'=h2, 'parent'=h1}

}

actstack == h1

== pop(); h0

heap == { h0 = {'y'=h2, 'x'=7, 'parent'=nil},

h1 = {'ns'=h0, 'parent'=nil},

h2 = {'f'=7, 'parent'=h0},

h3 = {'ns'=h2, 'parent'=h1}

}

actstack == nil

== h0

===================================================

It is an easy job to reformat the semantics definition into a prototype computer implementation.

(This is supposed to be how one designs a language --- write a semantics first and implement it second!)

===================================================

ABBREVIATIONS:

T0 == { var x = 7; var y = new {var f = x} }

C0 == var x = 7

C1 == var y = new T1

T1 == {var f = x}

TRANSLATIONS OF PHRASES:

Program[[ T0 ]] == let actstack = nil

Template[[ T0 ]]

Template[[ T0 ]] == let newhandle = alloc{'parent' = top()}

push(newhandle)

Command[[ C0 ]]

Command[[ C1 ]]

pop()

newhandle

Command[[ C0 ]] == bind(top(), 'x', Expression[[ 7 ]])

== bind(top(), 'x', int(7))

Command[[ C1 ]] == bind(top(), 'y', Expression[[ new T1 ]])

Expression[[ new T1 ]] == Template[[ T1 ]]

Template[[ {var f = x} ]] == let newhandle = alloc{'parent' = top()}

push(newhandle)

Command[[ var f = x ]]

pop()

newhandle

Command[[ var f = x ]] == bind(top(),'f', Expression[[ x ]])

Expression[[ x ]] == find Lefthandside[[ x ]]

== find searchStatic(top(),'x')

ASSEMBLED 'TARGET CODE':

let actstack = nil

let newhandle = alloc{'parent' = top()}

push(newhandle)

bind(top(), 'x', int(7))

bind(top(), 'y',

(let newhandle = alloc{'parent' = top()}

push(newhandle)

bind(top(),'f', find searchStatic(top(),'x'))

pop()

newhandle))

pop()

newhandle

===================================================

If we were serious about implementing a compiler for this particular language, we would devise translations of the operations into machine language, and we would do some internal optimizations of the "target code." (A typical optimization is the computation of the searchStatic operations into L-values; this is what happens in a Java or C# compiler, which precomputes the storage demands of allocated objects.) Finally, we would unwind nested function calls into the style of "flat code" that is typical of machine language.

T ::= { C } | array [ N ] of E

L ::= I | L . I | L [ E ]

Here is a sample program,

var x = 3;

var r = new array[4] of new {var f = 0};

r[x].f = x

which constructs an array of four objects.

The semantics of array declaration goes like this:

Template[[ array [ N ] of E ]] == allocArray(0, int(N), [[ E ]], alloc{})

allocArray(index, size, initcode, arrayhandle) ==
    if index == size :
        arrayhandle    # finished
    else :
        allocArray(index + 1, size, initcode,
                   bind(arrayhandle, index, Expression initcode))
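The recursion in allocArray can be tried out in Python 3. The sketch below makes some simplifying assumptions: the unevaluated initialization code, [[ E ]], is modelled as a zero-argument Python function that is called once per element, the element objects are allocated without the 'parent' link that Template[[ { C } ]] would add, and `alloc` and `bind` are compact stand-ins for the emulator's functions.

```python
heap, count = {}, 0

def alloc(d):
    global count
    handle = 'h' + str(count)
    count += 1
    heap[handle] = dict(d)
    return handle

def bind(handle, name, meaning):
    heap[handle][name] = meaning
    return handle

def alloc_array(index, size, initcode, arrayhandle):
    """Bind indexes index..size-1 in arrayhandle to freshly computed initial values."""
    if index == size:
        return arrayhandle    # finished
    return alloc_array(index + 1, size, initcode,
                       bind(arrayhandle, index, initcode()))

# var r = new array[4] of new {var f = 0}
r = alloc_array(0, 4, lambda: alloc({'f': 0}), alloc({}))

print(r, ':', heap[r])   # h0 : {0: 'h1', 1: 'h2', 2: 'h3', 3: 'h4'}
```

Because the initialization code is run again for each index, the four elements are four distinct objects rather than four references to one object.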

Write the semantics of array indexing, Lefthandside[[ L[E] ]]. How does it differ from L.I's semantics?

Calculate the semantics of the above program.

===================================================

P ::= T

C ::= var I = E | L = E | C1 ; C2

E ::= N | ( E1 + E2 ) | L | new T

T ::= { C }

L ::= I | L . I | this

===================================================

because the core computational abilities are fixed.

Robert Tennent proposed these principles for adding naming constructions to a language core:

===================================================

P : Program

C : Command D : Declaration

T : Template E : Expression

L : Lefthandside N : Numeral I : Variable

P ::= T

D ::= proc I : C end | class I : T | var I = E

C ::= L = E | C1 ; C2 | D | L ( )

E ::= N | ( E1 + E2 ) | L | new T

T ::= { C } | L ( )

L ::= I | L . I | this

===================================================

# Example 1:

var x = 7;

proc p: x = x + 1 end;

var y = new {var x = 2; p()}

When p() is called within the new object, p's body should likely update

the global x and not the one in the new object. (But an argument might

be made that the local x is incremented --- what do you think?)

# Example 2:

var clock = new {var time = 0;

proc tick(): time = time + 1 end};

var time = 99;

clock.tick()

The call, clock.tick(), should almost certainly

increment the local variable time that

resides in the object with procedure tick (and not the global

time that is declared separately from tick).

# Example 3:

var clock1 = new {var time = 0;

proc tick(): time = time + 1 end};

var clock2 = new {var time = 99;

var f = -1};

clock2.f = clock1.tick;

clock2.f()

Field f in object clock2 is assigned (a handle to) clock1's tick

procedure. When f is called (clock2.f()), this executes

tick in clock1, which should almost certainly

increment the time in clock1 (and not time in clock2).

There are three possible semantics to procedure declaration and call:

To implement static scoping, when p is declared, p is bound to the handle of a namespace that holds both p's body and the handle to the namespace that is active at the time p is declared. The namespace that holds the command and the handle is called a closure.

The invocation, p(), causes a lookup of p to find the closure. The handle saved in the closure is pushed onto the activation stack, making that namespace the active one, and p's command is executed. At conclusion, the handle is popped from the activation stack.

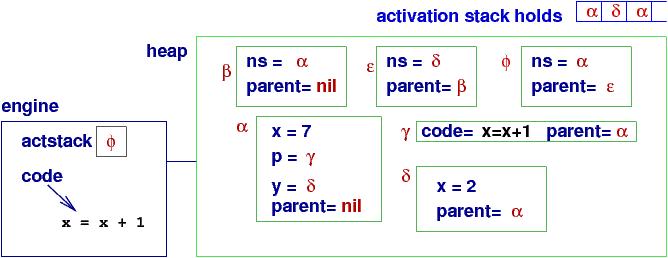

Here is a diagram that shows the execution of Example 1, where

p has been called, and its body, x = x + 1, is about to execute:

This is how static scoping is implemented in Algol60, Pascal, Ada, Modula, Python, Scheme, and almost all procedural, modular languages.

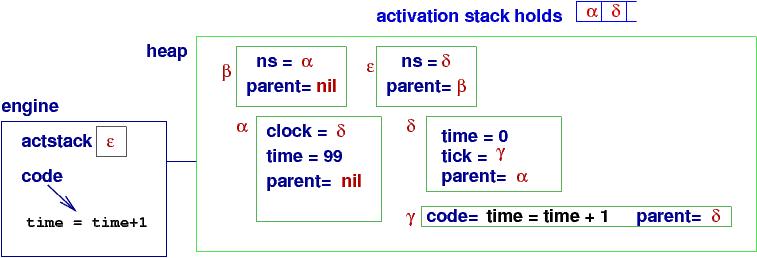

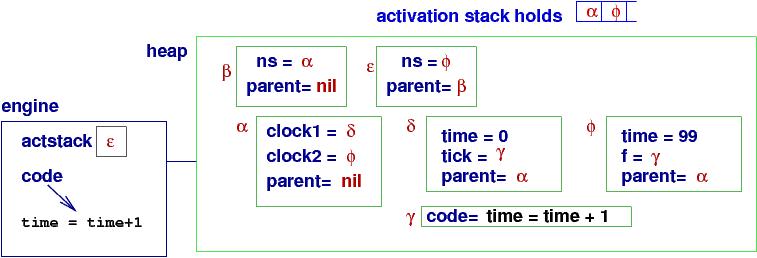

Static scoping also handles object structure nicely; here is a diagram of Example 2, where clock.tick() is called and its body, time = time + 1, is about to execute:

Again, since handle δ was saved in tick's closure, it can be

pushed onto the activation stack so that clock's time variable is

correctly updated.

Finally, since a closure is labelled by a handle, the handle can be assigned

to a variable, and Example 3 can operate as expected.

Here is a diagram of the machine state at the execution of the code body

generated by clock2.f():

Since the closure remembered the handle to the namespace it should use,

the call will update the correct time.

The delegate object in C# is exactly a closure that operates

as shown here.

To implement virtual scoping, a declaration, proc I: C end, binds I to a closure that holds just C; no handle is saved in the closure. When L() is called, L evaluates to an L-value, a pair of the form (θ, I), where θ is the namespace in which I is bound to its closure. θ itself is pushed onto the activation stack. Then the closure bound to I in namespace θ is fetched, and its command is extracted and executed, using θ as the active namespace, the source of its variables.

For Example 1, the execution looks almost exactly the same:

but the closure holds no handle.

Example 2 also computes the same way as before.

But Example 3 behaves differently, because the L-value computed

for clock2.f is (φ, 'f'),

which forces the code, time = time + 1, to update clock2's time:

Virtual scoping is used in Smalltalk and Java as the default.

(Java disallows Example 3, by the way!) C# uses both static and virtual

scoping for methods (to obtain the latter, use the keyword, virtual).

Python uses static scoping for procedures and virtual scoping for

procedures/methods declared within classes.
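The difference between static and virtual scoping on Example 3 can be checked with a small Python sketch. It rests on assumptions that are not part of the notes: namespaces are Python dicts stored in a dict called heap, the procedure body time = time + 1 is written by hand as the function tick_body, and one closure format (with a saved 'parent' handle) is used for both rules, the virtual rule simply ignoring the saved handle.

```python
heap = {}    # handle -> namespace (a dict)
stack = []   # activation stack of handles; stack[-1] is the active namespace

def alloc(namespace):
    handle = f"h{len(heap)}"
    heap[handle] = namespace
    return handle

def search_static(handle, name):
    """Follow 'parent' links from handle until name is found; return its L-value."""
    while handle is not None:
        if name in heap[handle]:
            return (handle, name)
        handle = heap[handle].get('parent')
    raise NameError(name)

def tick_body():
    """The command  time = time + 1,  run against the active namespace."""
    handle, name = search_static(stack[-1], 'time')
    heap[handle][name] += 1

def call(lvalue, scoping):
    """Call the closure bound at lvalue == (theta, I) under the given rule."""
    theta, name = lvalue
    closure = heap[heap[theta][name]]
    # static: push the handle saved at declaration; virtual: push theta itself
    stack.append(closure['parent'] if scoping == 'static' else theta)
    closure['code']()
    stack.pop()

def run_example3(scoping):
    heap.clear()
    stack.clear()
    g = alloc({'parent': None})
    stack.append(g)
    clock1 = alloc({'parent': g, 'time': 0})
    heap[clock1]['tick'] = alloc({'code': tick_body, 'parent': clock1})
    clock2 = alloc({'parent': g, 'time': 99, 'f': -1})
    heap[g].update(clock1=clock1, clock2=clock2)
    heap[clock2]['f'] = heap[clock1]['tick']   # clock2.f = clock1.tick
    call((clock2, 'f'), scoping)               # clock2.f()
    return heap[clock1]['time'], heap[clock2]['time']

print(run_example3('static'))    # (1, 99): clock1's time was incremented
print(run_example3('virtual'))   # (0, 100): clock2's time was incremented
```

The only line that differs between the two rules is the one that chooses which handle to push, which is the point made in the text.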

To implement dynamic scoping, a declaration saves a closure holding only code, as in virtual scoping. Upon call, the called procedure's body executes with whatever namespace is active at the position where the call appears. (Unlike static and virtual scoping, there is no handle pushed and popped.) In Example 1, the call to p updates the x declared in the inner object. In Example 2, the global time increments, and in Example 3, the time within clock2 increments.

Dynamic scoping is implemented in the original version of Lisp. (By the way, the first implementations of Smalltalk were Lisp-coded interpreters, which is how virtual scoping was developed.)

A class is declared like a procedure and it is called in the same fashion:

class clock : { var time = 0;

proc tick : time = time + 1 end};

var j = new clock();

var k = new clock();

k.tick()

new clock() is a "class call", which means that the binding for clock is found; it is a handle to a closure holding the template that allocates time and tick.

The template executes like the body of a procedure executes.

As with procedures, a class might be used with static,

virtual, or dynamic scoping. (In fact, C#/Java make it difficult, if

not impossible, for a class definition to even reference a global variable,

so the choice of scoping rarely matters!)

Here is a picture of the runtime configuration of the above

example just before the call, k.tick(), returns, assuming static scoping of classes

and procedures:

The two calls, clock(), constructed two namespaces, and k.tick()

used the one named by k.

IMPORTANT: No new computational machinery is added to the model. This is the beauty in Tennent's approach --- it exploits the computational abilities already in place.

The semantics of both command and template abstracts

follow a standard format.

Here, we define static-scoping semantics. (You should

write the semantics for virtual and dynamic scoping.)

===================================================

Declaration[[ proc I : C ]] ==

bind(top(), I, alloc{'type'= 'proc', 'code'= [[ C ]], 'parent'= top()})

Declaration[[ class I : T ]] ==

bind(top(), I, alloc{'type'= 'class', 'code'= [[ T ]], 'parent'= top()})

### findClosure(label, lvalue) extracts the closure bound at lvalue,

### checks that its type == label,

### and returns the closure's code and the handle to its global variables.

findClosure(label, (handle,name)) ==

let clhandle = find (handle,name)

if label == find(clhandle, 'type') :

(find(clhandle, 'code'), find(clhandle, 'parent'))

else : raise Exception

Command[[ L() ]] ==

let (code, parentlink) = findClosure('proc', Lefthandside[[ L ]])

push(parentlink)

Command code

pop()

Template[[ L() ]] ==

let (code, parentlink) = findClosure('class', Lefthandside[[ L ]])

push(parentlink)

let newhandle = Template code

pop()

newhandle

===================================================

var x = 0;

proc p: var y = x; x = x + y end;

p()

# at this point, is variable y bound in the global namespace?

Should procedure p be allowed to add variable y to the global namespace? The semantics above allows this. Almost all modern languages do not; instead, y is bound in a separate, local namespace that is created for the private use of p's body. In the next section, we study how to implement a local namespace for procedure calls.

Let's add expression parameters to procedures and classes:

===================================================

D ::= proc I1 ( I2 ) : C end | class I1 ( I2 ) : T

C ::= . . . | L ( E )

T ::= . . . | L ( E )

===================================================

When proc I1(I2): C end is declared, a closure is constructed

to hold parameter I2, command C, and the active namespace's handle,

which serves as the handle to find I1's

nonlocal variables.

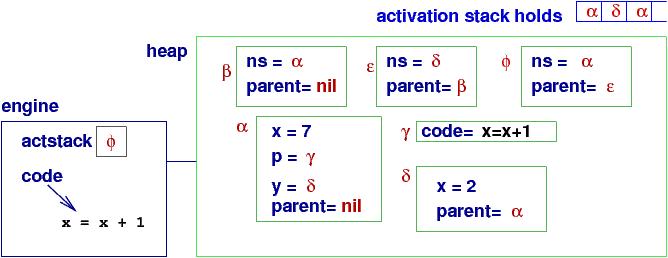

When the call, L(E), appears, L is evaluated to the L-value that leads to I1's closure; E is evaluated to its meaning, m; a new namespace (activation record) is allocated to hold the binding, I2 = m, along with the handle extracted from the closure. The handle of the activation record is pushed onto the activation stack, C executes, and the stack is popped at conclusion.

Here is a small example that shows how the activation record is

created when a procedure is called:

var time = 0;

proc tock(n):

var m = 2;

time = time + (m + n) # (*)

end;

tock(3)

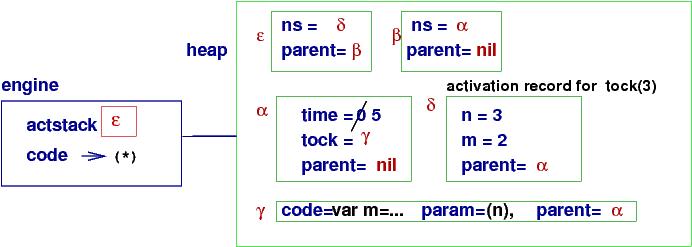

At point (*), just before the call to tock finishes, the configuration

looks like this:

At this point, the active namespace is δ, tock's activation record.

tock's global variables are found from (δ, 'parent'), that is, at the namespace labelled α.

When the call finishes, the stack pops, and the active namespace

reverts to α.

Here is a second example, a parameterized class:

class clock(init, increment): { var time = init;

proc tick():

time = time + increment

end;

proc reset(what):

var w = what + increment;

time = w #(**)

end

};

var j = new clock(3, 1);

j.reset(0)

The semantics of class declaration and invocation work the same as a procedure's.

Here is

a snapshot of this program, at (**), just before j.reset(0) returns:

The static chain for the call j.reset(0) is the sequence of parent links

starting from σ.

Notice the namespace that retains the values of init and increment,

which were bound when the object was constructed and must be saved for

the use of tick and reset.

Java/C# do not allow parameters to classes (instead, they use

parameterized constructor methods), but it is no problem to

implement them.

Again, no new semantic machinery is required.

Here is the

semantics for parameterized procedures.

===================================================

Declaration[[ proc I1 ( I2 ) : C ]]

== bind(top(), I1, alloc{'type' = 'proc', 'param'= I2,

'code'= [[ C ]], 'parent'= top()} )

### returns a tuple of the parameter name, command, and static link:

findClosure(label, (handle,name)) ==

let clhandle = find (handle,name)

if label == find(clhandle, 'type') :

(find(clhandle, 'param'), find(clhandle, 'code'), find(clhandle, 'parent'))

else : raise Exception

Command[[ L ( E ) ]] ==

let (paramname, code, parentlink) = findClosure('proc', Lefthandside[[ L ]])

push(alloc{paramname = Expression[[ E ]], 'parent' = parentlink})

Command code

pop()

===================================================
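Here is a Python sketch of the parameterized-procedure equations, run on the tock example. It makes several simplifying assumptions: namespaces are Python dicts in a dict called heap, the procedure is called by a plain name rather than by a general L, and the body of tock is hand-translated into the function tock_body. The helper names (lookup, declare_proc, call_proc) are illustrative only.

```python
heap = {}    # handle -> namespace (a dict)
stack = []   # activation stack of handles

def alloc(namespace):
    handle = f"h{len(heap)}"
    heap[handle] = namespace
    return handle

def top():
    return stack[-1]

def lookup(name):
    """Inside-out lookup: follow 'parent' links from the active namespace."""
    handle = top()
    while handle is not None:
        if name in heap[handle]:
            return handle
        handle = heap[handle]['parent']
    raise NameError(name)

def declare_proc(name, param, code):
    """Declaration[[ proc I1 ( I2 ) : C ]]: bind I1 to a closure."""
    heap[top()][name] = alloc({'type': 'proc', 'param': param,
                               'code': code, 'parent': top()})

def call_proc(name, argument):
    """Command[[ L ( E ) ]], restricted to the case where L is a plain name."""
    closure = heap[heap[lookup(name)][name]]
    if closure['type'] != 'proc':
        raise TypeError(name)
    record = alloc({closure['param']: argument, 'parent': closure['parent']})
    stack.append(record)       # the activation record becomes the active namespace
    closure['code']()
    stack.pop()
    return record              # returned only so that it can be inspected afterwards

def tock_body():
    heap[top()]['m'] = 2                                   # var m = 2
    t = lookup('time')
    m, n = heap[lookup('m')]['m'], heap[lookup('n')]['n']
    heap[t]['time'] = heap[t]['time'] + (m + n)            # time = time + (m + n)

alpha = alloc({'parent': None})   # the global namespace
stack.append(alpha)
heap[alpha]['time'] = 0           # var time = 0
declare_proc('tock', 'n', tock_body)
delta = call_proc('tock', 3)      # tock(3)
print(heap[alpha]['time'])        # 5
```

After the call, the local m and the parameter n live only in the activation record, and the active namespace has reverted to the global one.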

You should write a similar

semantics for a parameterized class.

begin D in C end

The semantics of the command block goes like this: a new namespace is allocated, into which D's bindings are placed; C uses the namespace, after which the namespace is deactivated.

For commands, the semantics looks like this:

Command[[ begin D in C end ]] == push(alloc{'parent' = top()})

Declaration[[ D ]]

Command[[ C ]]

pop()

The semantics ensures that declarations, D, as well as any

declarations constructed within C are deposited in a local

namespace. This ensures privacy --- no code outside of the

begin ... end can directly reference the bindings made in the block.

The format works especially well for templates, and it is the origin

of ``private fields'' in classes:

===================================================

T ::= { C } | private D in T | L ( E )

Template[[ private D in T ]] == push(alloc{'parent' = top()})

Declaration[[ D ]]

let han = Template[[ T ]]

pop()

han

===================================================

Example:

var clock = new private var time = 0 in

{ proc tick(n): time = time + n end }

clock.tick(1) # time cannot be referenced directly here

No new semantic machinery is required.
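The privacy claim can be checked with a Python sketch of the two template equations. As before, this is an illustration under assumptions: namespaces are Python dicts in a dict called heap, declarations and commands are passed as Python functions, and the call clock.tick(1) is performed by an ad hoc helper named call.

```python
heap = {}    # handle -> namespace (a dict)
stack = []   # activation stack of handles

def alloc(namespace):
    handle = f"h{len(heap)}"
    heap[handle] = namespace
    return handle

def top():
    return stack[-1]

def lookup(name):
    """Inside-out lookup along 'parent' links from the active namespace."""
    handle = top()
    while handle is not None:
        if name in heap[handle]:
            return handle
        handle = heap[handle]['parent']
    raise NameError(name)

def template_block(commands):
    """Template[[ { C } ]]: allocate the object's namespace and run C in it."""
    newhandle = alloc({'parent': top()})
    stack.append(newhandle)
    commands()
    stack.pop()
    return newhandle

def template_private(declare, template):
    """Template[[ private D in T ]]: run D in a fresh namespace, then build T."""
    stack.append(alloc({'parent': top()}))
    declare()
    han = template()
    stack.pop()
    return han

def tick_body():                 # time = time + n
    heap[lookup('time')]['time'] += heap[lookup('n')]['n']

def declare_time():              # var time = 0
    heap[top()]['time'] = 0

def declare_tick():              # proc tick(n): ... end
    heap[top()]['tick'] = alloc({'type': 'proc', 'param': 'n',
                                 'code': tick_body, 'parent': top()})

def call(obj, name, arg):        # obj.name(arg), static scoping
    closure = heap[heap[obj][name]]
    stack.append(alloc({closure['param']: arg, 'parent': closure['parent']}))
    closure['code']()
    stack.pop()

glob = alloc({'parent': None})
stack.append(glob)
clock = template_private(declare_time, lambda: template_block(declare_tick))
heap[glob]['clock'] = clock
call(clock, 'tick', 1)           # clock.tick(1)
private_ns = heap[clock]['parent']
print('time' in heap[clock], heap[private_ns]['time'])   # False 1
```

The handle returned to the program names only the public namespace, so time is reachable by tick's inside-out lookup but not by dot notation on clock.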

Other phrase forms can use blocks, but

modern languages often marry blocks

to parameterized abstracts, so that the private declarations

are treated like extra parameter bindings. This approach works

fine for straightforward examples, like this one:

class clock(init, increment)

private var time = init :

{ proc tick(): time = time + increment end;

proc reset(what): time = what end

}

An activation, like var c = new clock(0,2),

would construct a two-namespace object, where one namespace

holds init = 0, increment = 2,

and time = 0, and another holds the handles to the closures named by

tick and reset.

The example establishes a correspondence to this code fragment,

which uses a template-block:

var c = new private var init = 0; var increment = 2; var time = init in

{ proc tick(): time = time + increment end;

proc reset(what): time = what end

}

Tennent remarked there should be a ``correspondence principle''

in the semantics between declaration and parameter binding --- the semantical

effects should be the same.

It is easy to modify the semantics of parameterized abstracts to store private declarations: For the format, class I1 (I2) private D : T, the semantics of the class declaration is to store within a closure all of I2, D, and T. The semantics of the class's call, L(E), is to locate the closure named by L's L-value, allocate a new namespace, insert into it I2 bound to E's value, push the namespace, evaluate D (its bindings will be deposited into the new namespace), and then evaluate T (which allocates a namespace for the ``public fields'' of the object). At conclusion, the namespaces are popped from the activation stack.

D ::= M var I = E | M proc I1 ( I2 ) : C end | ...

M ::= private | public

A private declaration cannot be indexed from outside its own namespace; that is, only inside-out lookup works. Outside-in lookup, that is, L.I dot-notation, is not allowed to reference a binding labelled private.

This approach is typically implemented by a compiler, e.g., the Java compiler checks in advance, during its type-checking phase, that no private-labelled field is indexed outside-in.

As an exercise, you should define the semantics of the private-public labels and the Lefthandside semantics of lookup.

The main advantage of combining private and public fields in the same namespace is the increased utility of the this pronoun:

In the core language, there was a

pronoun, this, and its meaning was the active namespace, which

at that time coincided

with the notion of ``this object.'' Now that called procedures

generate activation records and now that objects with private declarations

can be allocated as compound

namespaces, the correspondence between "active namespace" and "this object"

breaks down.

Here is a use of this in an object-oriented language:

class C() : { var f = 0;

proc p(f) : this.f = f end };

var ob = new C();

ob.p(3)

Say that new C() generates a namespace whose handle is α and

holds the binding, 'f' = 0.

Then the call, ob.p(3), generates a namespace whose handle is

β that holds the parameter binding,

'f' = 3. The active namespace is β.

The command, this.f = f, assigns 3 (the binding found at

(β, 'f')) to the f whose L-value is (α, 'f').

Why does this happen? A typical object-oriented language uses virtual scoping for procedure (method) calls, and this is determined by the L-value computed by virtual scoping.

The call, ob.p(3) proceeds like this:

We already studied the semantics of virtual scoping. To employ it now, we must enforce this one significant restriction to the programming language:

var c = new { var x = 1;

proc p(x): this.x = x end

};

var d = new { var x = 2;

var f = c.p # ILLEGAL --- c.p is NOT a legal expression

};

d.f(99)

The object language we are developing is Java-like. (Recall that Python allows both static scoping and virtual scoping, so the above example is allowed in Python.) As an exercise, consider how to use the two forms of closures, for static and for virtual scoping, in the same language. Write the semantics definition of procedure call to check which form of procedure closure is called. This is how it's done in C# and Python.

Here is how to define virtual-scoping/this for procedure calls but

allow inside-out variable lookup to execute with static scoping.

This essentially (but not exactly!) matches Java's semantics:

===================================================

### same as before:

Declaration[[ proc I1 ( I2 ) : C ]]

== bind(top(), I1, alloc{'type' = 'proc', 'param'= I2,

'code'= [[ C ]], 'parent'= top()} )

### same as before:

findClosure(label, (handle,name)) ==

let clhandle = find (handle,name)

if label == find(clhandle, 'type') :

(find(clhandle, 'param'), find(clhandle, 'code'), find(clhandle, 'parent'))

else : raise Exception

### modify this equation:

Command[[ L ( E ) ]] ==

let (thishandle, id) = Lefthandside[[ L ]]

let (paramname, code, parentlink) = findClosure('proc', (thishandle, id))

push(alloc{paramname = Expression[[ E ]], 'this' = thishandle,

'parent' = parentlink})

Command code

pop()

### modify this equation:

Expression[[ L ]] == let val = find Lefthandside[[ L ]]

if notHandleToClosure(val) : val

else : raise Exception

Lefthandside[[ I ]] == ...as before...

Lefthandside[[ L . I ]] == ...as before...

Lefthandside[[ this ]] == if member('this', top()) :

(top(), 'this')

else : raise Exception # OR, (top(), 'ns')

===================================================
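The modified call equation can be exercised on the earlier example, class C with field f and method p(f). The following Python sketch assumes that namespaces are Python dicts in a dict called heap, that the object for new C() is built by hand, and that the body this.f = f is hand-translated into p_body; call returns the activation record's handle only so that it can be inspected.

```python
heap = {}    # handle -> namespace (a dict)
stack = []   # activation stack of handles

def alloc(namespace):
    handle = f"h{len(heap)}"
    heap[handle] = namespace
    return handle

def top():
    return stack[-1]

def search_parent(handle, name):
    """Lefthandside[[ I ]]: inside-out lookup along 'parent' links."""
    while handle is not None:
        if name in heap[handle]:
            return (handle, name)
        handle = heap[handle]['parent']
    raise NameError(name)

def lhs_this():
    """Lefthandside[[ this ]]: defined only inside a method's activation record."""
    if 'this' in heap[top()]:
        return (top(), 'this')
    raise NameError('this')

def call(lvalue, argument):
    """Command[[ L ( E ) ]] with the 'this' field added to the activation record."""
    thishandle, name = lvalue
    closure = heap[heap[thishandle][name]]
    record = alloc({closure['param']: argument, 'this': thishandle,
                    'parent': closure['parent']})
    stack.append(record)
    closure['code']()
    stack.pop()
    return record

def p_body():                                # this.f = f
    han, name = lhs_this()
    this_object = heap[han][name]            # the handle stored under 'this'
    fh, fn = search_parent(top(), 'f')       # finds the parameter f first
    heap[this_object]['f'] = heap[fh][fn]

glob = alloc({'parent': None})
stack.append(glob)
alpha = alloc({'parent': glob, 'f': 0})      # the object built by new C()
heap[alpha]['p'] = alloc({'type': 'proc', 'param': 'f',
                          'code': p_body, 'parent': alpha})
heap[glob]['ob'] = alpha
beta = call((alpha, 'p'), 3)                 # ob.p(3)
print(heap[alpha]['f'], heap[beta]['f'])     # 3 3
```

The plain name f resolves to the parameter in the activation record, while this.f goes through the 'this' entry to the field in the object.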

When subclasses arrive, the semantics shown for L(E) and for L.I change once more.

class Clock() : private var time = 0 in

{ proc tick(): time = time + 1 end;

proc reset(time): this.time = time end # the problem is here

}

var c = new Clock();

c.tick();

c.reset(99);

Explain why the error disappears if the private variable is saved in the

same namespace with the public methods.

(Hint: reread the end of the previous section, about public-private labelling.)

Tennent's extension principles do not yield subclasses.

Indeed, subclassing is an operation on templates, independent

from abstracts, parameters, and blocks. In its most general form,

it is a ``template append'' operation:

T ::= { C } | L ( E ) | T1 + T2

This form is called a mix-in, and it would

be used like this:

class Point(a,b) : { var x = a; var y = b;

proc paint() : ...x...y...a...b... end;

proc display() : this.paint() end

};

class Color(c) : { var color = c;

proc paint(...) : super.paint(); ...color... end

};

class ColoredPoint(x,y,c) : Point(x,y) + Color(c);

var RGB = 999999;

var p = new ColoredPoint(0,0,RGB)

We will undertake a more modest version of the append operation,

one that looks like Java:

===================================================

D ::= ... | class I1 ( I2 ) : T | var I = E

E ::= ... | new T

T ::= { C } | L ( E ) | extends T with { C }

L ::= this | super | I | L . I

===================================================

Here is an example in the more modest syntax:

class Point(a,b) : { var x = a; var y = b;

proc paint() : ...x...y...a...b... end;

proc display() : this.paint() end

};

class ColoredPoint(m,n,c) : extends Point(m,n) with

{ var color = c;

proc paint(...) : super.paint(); ...color... end

};

var p1 = new Point(0,0);

var p2 = new ColoredPoint(9,88,777)

Object p1 will be modelled by two

namespaces, one holding bindings for a and b, and one holding

x, y, paint, and display.

Similarly, p2 will be a four-namespace object.

All of p2.paint, p2.display,

p2.color, p2.x, and p2.y are well-defined L-values, and

we must alter the semantics of L.I to ensure this is so.

And there is the notion of ``superobject'' (``superclass''), as used in super.paint() --- p2's paint method will call the paint method in the ``superobject part'' of p2.

Finally, there is virtual-method override at work: the call, p2.display(), invokes the method display in Point, whose call, this.paint(), activates the paint method in ColoredPoint (whereas, p1.display() activates paint in Point).

All these issues will be handled by a new linkage, implemented by a new field, 'super', which links subclass-namespaces to superclass-namespaces.

Now, there are two steps involved in computing an L-value like p2.display:

Here is the relevant

fragment of the language:

===================================================

D ::= proc I1 ( I2 ) : C | class I1 ( I2 ) : T | var I = E

C ::= L = E | D | L ( E )

E ::= L | new T

T ::= { C } | L ( E ) | private D in T | extends T with { C }

L ::= I | L . I | this | super

===================================================

As always, a program constructs a heap of namespaces

and manages a linked-list

activation stack that is pointed to by actstack.

An abstract (procedure/method) call is handled differently depending on whether it has form L.I(E) or L(E), so we define an auxiliary function for handling calls:

===================================================

Program[[.]] updates heap:

[[ T ]] == let actstack = nil

Template[[ T ]]

Declaration[[.]] updates heap

[[ var I = E ]] == bind(top(), I, Expression[[ E ]])

[[ proc I1 ( I2 ) : C ]] ==

bind(top(), I1, alloc{'type' = 'proc', 'param' = I2,

'code' = [[ C ]], 'parent' = top()})

[[ class I1 ( I2 ) : T ]] ==

bind(top(), I1, alloc{'type' = 'class', 'param' = I2,

'code' = [[ T ]], 'parent' = top()})

Command[[.]] updates heap:

[[ D ]] == Declaration[[ D ]]

[[ L = E ]] == bind(Lefthandside[[ L ]], Expression[[ E ]])

[[ L ( E ) ]] == let (han, paramname, code) = callClosure('proc', [[ L ]])

bind(han, paramname, Expression[[ E ]])

push(han)

Command code

pop()

### callClosure(label, L) locates the closure named by L,

### constructs an activation record for its call, and returns a tuple holding

### the handle to the activation and the closure's paramname and code

callClosure(label, [[ I ]]) ==

let clhandle = find Lefthandside[[ I ]]

if label == find(clhandle, 'type') :

(alloc{'parent' = find(clhandle, 'parent')},

find(clhandle, 'param'),

find(clhandle, 'code'))

else : raise Exception

callClosure(label, [[ L . I ]]) ==

let thishandle = find Lefthandside[[ L ]]

let clhandle = find Lefthandside[[ L . I ]]

if label == find(clhandle, 'type') :

(alloc{'parent' = find(clhandle, 'parent'), 'this' = thishandle},

find(clhandle, 'param'),

find(clhandle, 'code'))

else : raise Exception

Expression[[.]] updates heap returns Denotable:

[[ L ]] == let v = find Lefthandside[[ L ]]

if notHandleToClosure(v) : v

else : raise Exception

[[ new T ]] == Template[[ T ]]

Template[[.]] updates heap and returns Handle:

[[ { C } ]] == let newhandle = alloc{'parent' = top(), 'super' = nil}

push(newhandle)

Command[[ C ]]

pop()

newhandle

[[ private D in T ]] == push(alloc{'parent' = top()})

Declaration[[ D ]]

let han2 = Template[[ T ]]

pop()

han2

[[ extends T with { C } ]] == let han1 = Template[[ T ]]

let han2 = alloc{'parent' = top(), 'super' = han1}

push(han2)

Command[[ C ]]

pop()

han2

[[ L ( E ) ]] == let (han, paramname, code) = callClosure('class', [[ L ]])

bind(han, paramname, Expression[[ E ]])

push(han)

let obhan = Template[[ code ]]

pop()

obhan

Lefthandside[[.]] returns LValue:

[[ I ]] == searchChain('parent', top(), I)

[[ L . I ]] == searchChain('super', find Lefthandside[[ L ]], I)

# Starting from namespace han, searches for I in the namespaces linked

# together by linkname and returns the L-value where I resides:

searchChain(linkname, han, I) ==

if han != nil :

if member(I, han) :

(han, I)

else : searchChain(linkname, find(han, linkname), I)

else : raise Exception

[[ this ]] == if member('this', top()):

(top(), 'this')

else : raise Exception

[[ super ]] == searchChain('parent', top(), 'super')

===================================================
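The interplay of searchChain, this, and super can be seen in a Python sketch of the Point and ColoredPoint example. It is a simplification under stated assumptions: namespaces are Python dicts in a dict called heap, the objects are built by hand instead of by the Template equations, the parameter namespaces for a, b, m, n, and c are omitted, methods take no arguments, and each method body just appends its name to a list called trace.

```python
heap, stack, trace = {}, [], []

def alloc(namespace):
    handle = f"h{len(heap)}"
    heap[handle] = namespace
    return handle

def top():
    return stack[-1]

def find(lvalue):
    han, name = lvalue
    return heap[han][name]

def search_chain(linkname, han, name):
    """Search for name in the namespaces linked by linkname, starting at han."""
    while han is not None:
        if name in heap[han]:
            return (han, name)
        han = heap[han].get(linkname)
    raise NameError(name)

def call_method(obj, name):
    """L.I(): find I along 'super' links from obj; run it with 'this' = obj."""
    closure = heap[find(search_chain('super', obj, name))]
    stack.append(alloc({'parent': closure['parent'], 'this': obj}))
    closure['code']()
    stack.pop()

def proc(code, home):
    return alloc({'type': 'proc', 'code': code, 'parent': home})

def point_paint():
    trace.append('Point.paint')

def display():                                   # this.paint()
    call_method(find((top(), 'this')), 'paint')

def colored_paint():                             # super.paint(); ...color...
    superobj = find(search_chain('parent', top(), 'super'))
    call_method(superobj, 'paint')
    trace.append('ColoredPoint.paint')

glob = alloc({'parent': None})
stack.append(glob)

def new_point():
    pt = alloc({'parent': glob, 'super': None, 'x': 0, 'y': 0})
    heap[pt]['paint'] = proc(point_paint, pt)
    heap[pt]['display'] = proc(display, pt)
    return pt

p1 = new_point()
p2 = alloc({'parent': glob, 'super': new_point(), 'color': 777})
heap[p2]['paint'] = proc(colored_paint, p2)

call_method(p1, 'display')
call_method(p2, 'display')
print(trace)   # ['Point.paint', 'Point.paint', 'ColoredPoint.paint']
```

The call p2.display() finds display in the Point part by following 'super', yet this.paint() starts its search again at p2 and therefore reaches the paint in the ColoredPoint part, which is the virtual-method override described earlier.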

Important points:

There exists a super link only in namespaces that model objects, and the value of super is non-nil only when the namespace is constructed by the extends construction.

First, these languages do not save a parent link in the activation record of a method call. Lookups are done using this, even "static scoping" ones. (But see the next sentence.)

Second, storage for a subobject is allocated alongside the storage of its superobject in one contiguous piece. The Java compiler uses this layout to precompute static-scoping lookup of variables. Examples follow.

Java uses virtual scoping for outside-in method calls, L.I(...), as expected, but

for an inside-out call, I(...),

if method I is declared public, then I(...) is treated as

this.I(...), and

method lookup uses virtual scoping.

For

class D {

public void f() { System.out.println("D.f");}

public void g() { f(); }

}

public class C extends D {

public void f() { System.out.println("C.f"); }

}

the command, new C().g(), prints C.f,

showing that f is

treated as this.f().

If procedure I is labelled private, however, the Java compiler uses

the textual position of the call, I(...), to precompute

a static-scoping lookup.

For this example:

class D {

private void f() { System.out.println("D.f");}

public void g() { f(); }

}

public class C extends D {

public void f() { System.out.println("C.f"); }

}

The command, new C().g(), prints D.f.

The Java compiler precomputed the jump

to procedure f in class D, because the call, f(), appeared within

class D, and a private procedure f was declared there.

Beware of this exception to the rule.

Because there are only the local-global levels of variables, the Java compiler can precompute all variable lookups:

class D {

public int x = 2;

public int y = this.x + x;

public void f() { System.out.print(" D.f ");}

public void g() { f(); System.out.println(this.x); }

}

public class C extends D {

public int x = 3;

public void f() { System.out.print(" C.f "); }

}

The commands,

C ob = new C(); System.out.print(ob.x); ob.g();

D copy = ob; System.out.print(copy.x); copy.g();

generate this output:

3 C.f 2

2 C.f 2

The object, ob, was allocated like this:

this -> +---------

+0| C (origin class of this object)

+========

+1| x = 2

+-------- (D part)

+2| y = 4

+========

+3| x = 3 (C part)

+-------

Notice the offsets, +1, +2, +3, that index the three

fields in ob. (Method handles are not stored in the object; instead, the name of the class that constructed the object is stored

and used to locate the methods.)

The Java compiler reformats variable lookups

of format L.I into L.(+offset) so that

the compiled

code looks somewhat like this:

class D {

public int x = 2;

public int y = this.(+1) + x;

public void f() { System.out.print(" D.f ");}

public void g() { this.f(); System.out.println(this.(+1)); }

}

public class C extends D {

public int x = 3;

public void f() { System.out.print(" C.f "); }

}

...

C ob = new C(); System.out.print(ob.(+3)); ob.g();

D copy = ob; System.out.print(copy.(+1)); copy.g();

Notice that ob.x became ob.(+3), since ob has

data type C. And copy.x became copy.(+1),

since copy has type D.

So, even though ob and copy name the very same object,

they produce different results for variable lookups!

Be careful.
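The effect of precomputed offsets can be imitated in a few lines of Python. This is a toy model, not a description of any real compiler's output: the object is a list whose slots follow the layout diagram above, and OFFSETS is an assumed table from static type and field name to slot number.

```python
# The one object named by both ob and copy; slot 0 holds its origin class.
obj = ['C', 2, 4, 3]

# Offsets chosen per static type (an assumed table that mirrors the diagram).
OFFSETS = {
    'D': {'x': 1, 'y': 2},
    'C': {'x': 3, 'y': 2},   # C redeclares x, so C-typed code uses slot +3
}

def field(static_type, storage, name):
    """Look up a field by the offset fixed for the variable's static type."""
    return storage[OFFSETS[static_type][name]]

print(field('C', obj, 'x'))   # 3, as for ob.x
print(field('D', obj, 'x'))   # 2, as for copy.x
```

The lookup never consults slot 0, which is why the declared type of the variable, and not the class that built the object, decides which x is read.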

C++ and C# use semantics similar to Java's, but they require that the programmer insert the keyword, virtual, in a method's definition header line to allow method override, e.g., public virtual void myMethod(...){...}. The compiler remembers that all calls to myMethod, whether they look like myObject.myMethod(...) or myMethod(...), use virtual lookup.

A variation on procedures and methods is found in Python, which requires that each method defined within a class include this as an explicit parameter name, e.g., def myMethod(this, param1, param2, ...):.... As a consequence, a class method must be called with dot notation: myobject.mymethod(arg1,arg2,...) --- mymethod(arg1, arg2,...) is not allowed. Ordinary procedures, not declared in classes, do not require a this parameter. Method call and variable reference in Python match closely the formal semantics defined in this section.

Every object language has its own unique semantics for method definition, scoping, subclasses, and this. Be careful!

===================================================

T ::= { C } | T1 extendedby T2 | private D in T | L ( E )

===================================================

and the semantics goes like this:

===================================================

Template[[.]] updates heap and returns Handle:

Template[[ T ]] = evalTemplate(T, nil)

### evalTemplate(t, s) allocates template t using handle s as its super-link

evalTemplate( [[ { C } ]], superlink) ==

let newhandle = alloc{'parent' = top(), 'super' = superlink}

push(newhandle)

Command[[ C ]]

pop()

newhandle

evalTemplate([[ T1 extendedby T2 ]], superlink) ==

evalTemplate(T2, evalTemplate(T1, superlink))

evalTemplate([[ private D in T ]] , superlink) ==

push(alloc{'parent' = top()})

Declaration[[ D ]]

let han2 = evalTemplate(T, superlink)

pop()

han2

evalTemplate([[ L ( E ) ]], superlink) ==

let (han, paramname, code) = callClosure('class', [[ L ]])

bind(han, paramname, Expression[[ E ]])

push(han)

let obhan = Template[[ code ]] # ignore superlink!

pop()

obhan

===================================================

evalTemplate(t, s) links the allocated object for template t

to the object's super-object, s. This makes the semantics of mixins,

T1 extendedby T2, truly simple --- it is two objects linked together.

The usual form of inheritance,

I(...) extendedby {C}, is a simple instance of a mixin.

The multiple inheritance of procedure p that appears to arise in x's object in

class C(): {proc p(...):...};

class D(): C() extendedby {proc q(...):... p(...)...};

var x = new {proc p(...): ...} extendedby D()

is resolved by the above semantics in copy-rule style: x.q()

calls procedure p in class C.

This matches the expansion of x's body into

var x = new {proc p(...): ...}

extendedby

({proc p(...):...} extendedby {proc q(...):... p(...)...})

The stack-heap machine model we have used is typical for object languages. If we are less dogmatic, we can dispense with the activation stack by adding a parameter to the semantic functions that is the handle to the active namespace. The parameter has the same value as did the top of the activation stack.

Here is the core language from the beginning of these Notes redefined with the active-namespace

parameter. The semantics gets simpler because there are no push-pop-top actions:

===================================================

P ::= T

C ::= var I = E | L = E | C1 ; C2 | while E : C end

E ::= N | ( E1 + E2 ) | L | new T

T ::= { C }

L ::= I | L . I

Program[[.]] updates heap:

[[ T ]] == Template[[ T ]](nil)

Command[[.]](handleToActiveNamespace) updates heap:

[[ var I = E ]](a) == bind(a, I, Expression[[ E ]](a))

[[ L = E ]](a) == bind(Lefthandside[[ L ]](a), Expression[[ E ]](a))

[[ while E : C end ]](a) == if Expression[[ E ]](a) != 0 :

Command[[ C ]](a)

Command[[ while E : C end ]](a)

else : (skip)

[[ C1 ; C2 ]](a) == Command[[ C1 ]](a)

Command[[ C2 ]](a)

Expression[[.]](handleToActiveNamespace) updates heap and returns Denotable:

[[ N ]](a) == return int(N)

[[ E1 + E2 ]](a) == Expression[[ E1 ]](a) + Expression[[ E2 ]](a)

[[ L ]](a) == find (Lefthandside[[ L ]](a))

[[ new T ]](a) == Template[[ T ]](a)

Template[[.]](handleToActiveNamespace) updates heap and returns Handle:

[[ { C } ]](a) == let newhandle = alloc{'parent' = a}

Command[[ C ]](newhandle) # use new namespace for C

newhandle

Lefthandside[[.]](handleToActiveNamespace) returns LValue:

[[ I ]](a) == searchStatic(a, I)

where searchStatic(thishandle, I) ==

if thishandle != nil :

if member(I, thishandle) :

(thishandle, I)

else : searchStatic(find(thishandle, 'parent'), I)

else : raise Exception

[[ L . I ]](a) == let han = find (Lefthandside[[ L ]](a))

if member(I, han) :

(han, I)

else : raise Exception

===================================================
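Because every equation now takes the active namespace as an argument, the definition transcribes almost directly into a recursive interpreter. The Python sketch below assumes an abstract syntax of nested tuples (the tags 'var', 'assign', 'seq', 'while', 'num', 'add', 'deref', 'new', 'block', 'id', and 'dot' are invented for this illustration) and models the heap as a Python dict. The while loop is written with Python's own loop rather than by recursion.

```python
heap = {}  # handle -> namespace (a dict)

def alloc(namespace):
    handle = f"h{len(heap)}"
    heap[handle] = namespace
    return handle

def search_static(han, name):
    while han is not None:
        if name in heap[han]:
            return (han, name)
        han = heap[han]['parent']
    raise NameError(name)

def lefthandside(l, a):
    if l[0] == 'id':                       # I
        return search_static(a, l[1])
    han, name = lefthandside(l[1], a)      # L . I
    han = heap[han][name]
    if l[2] in heap[han]:
        return (han, l[2])
    raise NameError(l[2])

def expression(e, a):
    tag = e[0]
    if tag == 'num':
        return int(e[1])
    if tag == 'add':
        return expression(e[1], a) + expression(e[2], a)
    if tag == 'deref':
        han, name = lefthandside(e[1], a)
        return heap[han][name]
    if tag == 'new':
        return template(e[1], a)
    raise ValueError(tag)

def command(c, a):
    tag = c[0]
    if tag == 'var':
        heap[a][c[1]] = expression(c[2], a)
    elif tag == 'assign':
        han, name = lefthandside(c[1], a)
        heap[han][name] = expression(c[2], a)
    elif tag == 'seq':
        command(c[1], a)
        command(c[2], a)
    elif tag == 'while':
        while expression(c[1], a) != 0:
            command(c[2], a)
    else:
        raise ValueError(tag)

def template(t, a):
    newhandle = alloc({'parent': a})
    command(t[1], newhandle)               # use the new namespace for C
    return newhandle

# { var x = 3; var ob = new { var f = 0 }; ob.f = (x + 1) }
c1 = ('var', 'x', ('num', '3'))
c2 = ('var', 'ob', ('new', ('block', ('var', 'f', ('num', '0')))))
c3 = ('assign', ('dot', ('id', 'ob'), 'f'),
      ('add', ('deref', ('id', 'x')), ('num', '1')))
root = template(('block', ('seq', c1, ('seq', c2, c3))), None)
print(heap[heap[root]['ob']]['f'])         # 4
```

No stack appears anywhere: the Python call stack carries the handle a in the same way the activation stack carried top().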

The semantics of procedures gets simpler, too. Here is the static-scoping

semantics:

===================================================

Declaration[[ proc I : C ]](a) ==

bind(a, I, alloc{'type'= 'proc', 'code'= [[ C ]], 'parent'= a})

Command[[ L () ]](a) ==

let (code, parentlink) = findClosure('proc', Lefthandside[[ L ]](a))

Command code (parentlink)

===================================================

as does the semantics of blocks and private definitions.

What we have developed is a Scott-Strachey denotational-style semantics based on namespace algebra.

Exercise: Revise the semantics definitions for the templates of the language with classes and mix-ins,

so that Template[[ T ]](a)(superlink) allocates the object(s) defined by

T and returns the corresponding entry handle. This would make the

semantics go as follows:

Program[[ T ]] == Template[[ T ]](nil)(nil)

and

Expression[[ new T ]](a) == Template[[ T ]](a)(nil)

and

Template[[ { C } ]](a)(superlink) ==

let newhandle = alloc{'parent' = a, 'super' = superlink}

Command[[ C ]](newhandle)

newhandle

and so on.